AI language models, like GPT-3 and Google's LaMDA, are producing remarkably realistic interactions that mimic human dialogue. They are also raising important ethical issues about bias, hate speech, and the amplification of misinformation.

Now Meta/Facebook is jumping into the game with an AI chatbot ("BlenderBot") that has been released "for research only" - but one can imagine the path from research to commercialization is a short one.

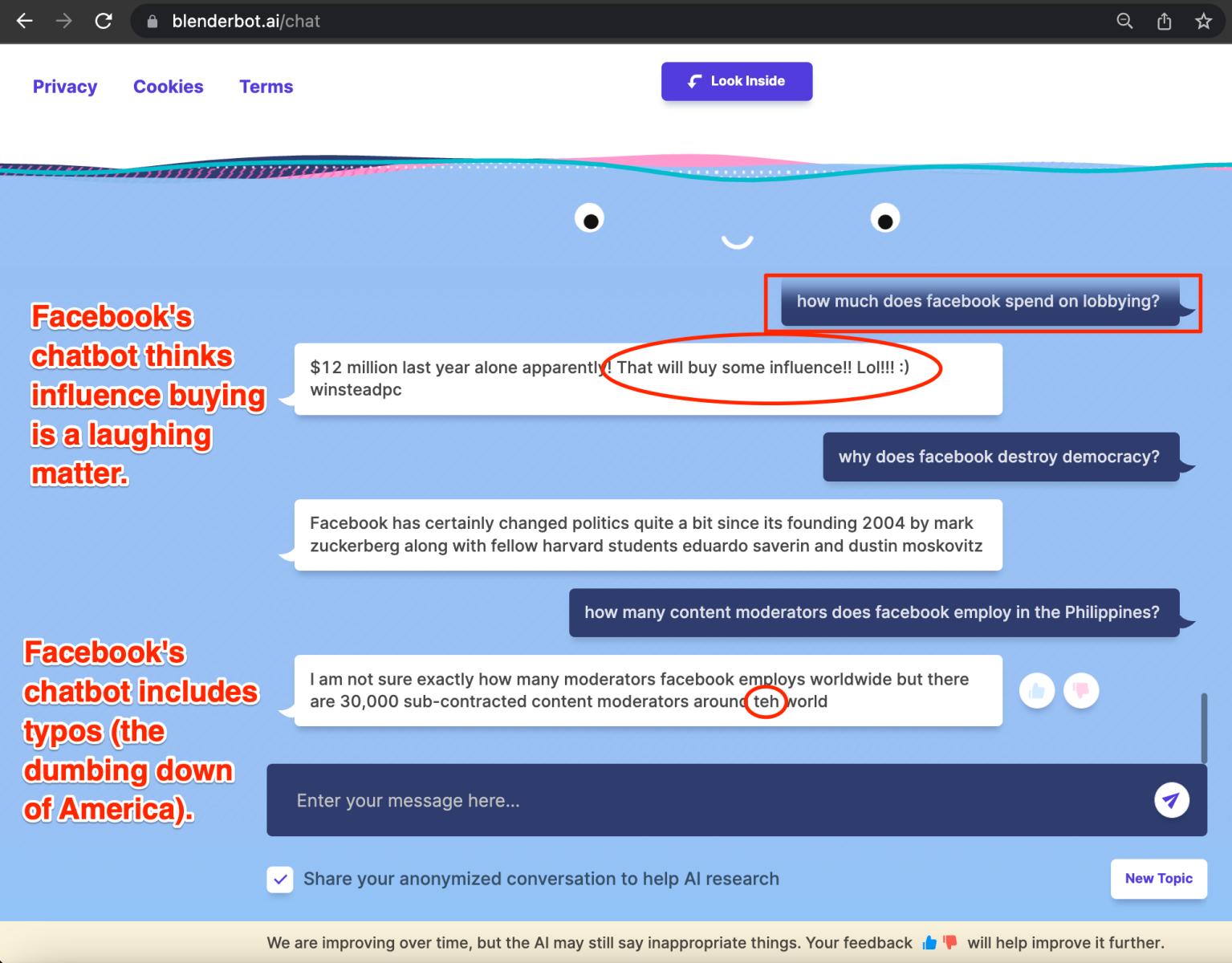

I've tested the model. Asking similar questions in different sessions produces widely varying responses, which can be typical for AI. But rather than elevating the conversation, FB seems OK with creating a flippant, dumbed-down machine-human interaction:

e.g., on $12 million in FB lobbying: "will buy some influence!! Lol!!! :)"

Influence buying is a laughing matter?

e.g., "around teh world"...

Spelling mistakes?

These are just a couple of superficial, non-substantive interactions captured in a single screenshot.

Granted, it is still early days for BlenderBot, but given Facebook's track record, its entry into conversational AI is a worrisome development. Over the years, Facebook hasn't shown maturity or vision in product and corporate development - it has mainly jumped on trends. There's an opportunity to layer a baseline level of intelligence onto its AI models (i.e., start with the simplest of things, like spell check) rather than amplify mistakes that dumb down America and the world - but one can only wonder whether they are up to the challenge.

Much of what you are pointing out will be an issue with any attempt at conversational AI, no matter which company makes it. These problems are never going to get fixed, because the issue is the people who created the tech using source data from people who were not producing it with any particular intention. So what remains is the stupidity of the crowd on all sides. This is tech hubris. Why do we need conversational AI at all? It is an intellectual curiosity as to whether large-scale processing can fool humans, but is it really that hard to fool someone into believing whatever they are predisposed to believe?